Top GPUs for machine learning workstations

2/4/2022 5:39 PM

While building your ideal workstation, choosing the right components is critical. Depending on the type of machine learning, neural nets, and models you’ll be using, you might get away with just a powerful CPU, but only if your models are very simple, with hundreds of parameters. For serious work, it’s better to get one or more powerful GPUs. But there is a catch: The Great Chip Shortage! Scientists, consumers, gamers, and system integrators (SIs) are embroiled in a struggle to acquire these powerful pieces of hardware.
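To put "hundreds of parameters" in perspective, here is a minimal Python sketch that counts the weights and biases of a plain fully connected network. The function name `mlp_param_count` and the layer sizes are hypothetical and chosen only for illustration. Real frameworks report parameter counts for you.

```python
def mlp_param_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input first, e.g. [10, 16, 1].
    A dense layer from n_in to n_out has n_in * n_out weights plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# A tiny model of the kind a CPU handles comfortably (hypothetical sizes).
print(mlp_param_count([10, 16, 1]))          # 193
# A modest classifier on 28x28 inputs already has roughly 670k parameters.
print(mlp_param_count([784, 512, 512, 10]))  # 669706
```

Even this small classifier has thousands of times more parameters than the toy model. Modern language and image models go far beyond that, which is where GPUs pay off.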

Taking that into consideration, we will do our best to maximize your options. However, the final decision about which GPU best fits your needs rests with you or your SI.

To start at the beginning, you need a solid foundation for the GPUs, which means a CPU and motherboard. If you don’t have these yet, learn how to choose a good CPU and motherboard for your workstation like an expert.

GPUs are the kings of AI and machine learning

There is no denying that a GPU or specialized FPGA will be much faster than a CPU for almost any given ML workload, often by an order of magnitude or more. GPUs also benefit from specialized libraries that make efficient use of their resources, and their Tensor cores excel at the lower-precision calculations that neural nets need. They are also more energy efficient, thanks in part to the greater parallelization of the entire process.

Source: Fuse.ai

Even before the chip shortage, a powerful GPU was a capital investment. Whether it's one for a single scientist or thousands for institutes and laboratories, GPUs present a daunting bill. Here are a few parameters to keep in mind:

- Single- and half-precision calculation speed – Machine learning usually doesn’t require high precision for working with language or image models, so you can eke out more performance by dropping the precision a few notches.

- VRAM capacity – This limits the type of algorithm you can run on your GPU/s, since some algorithms require more than 24GB of VRAM, which restricts your choice to the upper tier.

- Memory bandwidth – In general, the higher the better.

- Your libraries – The card/s you select should support the libraries you intend to use for your project or line of work.
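The precision, VRAM, and bandwidth points above all come down to bytes per parameter. Below is a rough Python sizing sketch that assumes a hypothetical 1.5-billion-parameter model and an illustrative bandwidth of 900 GB/s. The helper names are our own. The 16-bytes-per-parameter training figure is a common rule of thumb for mixed-precision training with the Adam optimizer, and it ignores activations and framework overhead. Treat all of these numbers as lower bounds.

```python
import struct

# Bytes per value from the struct format codes:
# "f" is IEEE 754 single precision, "e" is IEEE 754 half precision.
BYTES_PER_VALUE = {"fp32": struct.calcsize("f"), "fp16": struct.calcsize("e")}


def round_to_fp16(x):
    """Round a Python float to the nearest half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def weights_gb(num_params, dtype="fp32"):
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e9


def training_gb(num_params, bytes_per_param=16):
    """Rough footprint for mixed-precision training with Adam.

    Assumption: fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
    + two fp32 Adam moments (4 + 4) = 16 bytes per parameter.
    Activations, framework overhead and fragmentation are NOT included.
    """
    return num_params * bytes_per_param / 1e9


def min_read_time_ms(num_bytes, bandwidth_gb_per_s):
    """Lower bound on the time to stream num_bytes once from VRAM."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3


print(round_to_fp16(0.1))          # 0.0999755859375: fp16 keeps about 3 decimal digits
params = 1_500_000_000             # hypothetical 1.5B-parameter model
print(weights_gb(params, "fp32"))  # 6.0
print(weights_gb(params, "fp16"))  # 3.0
print(training_gb(params))         # 24.0: roughly all of a 24GB card, before activations
# One pass over the fp16 weights at a hypothetical 900 GB/s:
print(min_read_time_ms(params * BYTES_PER_VALUE["fp16"], 900))
```

Halving the precision halves both the memory footprint and the time spent moving weights. That is why lower precision and higher memory bandwidth both translate into speed.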

A machine learning GPU for almost every wallet

It’s important to note that while you can get GPUs on the cheap (and there are some good deals out there), you will most likely be looking at thousands of dollars. To make the choice a touch easier, we have divided them into four categories according to their capabilities and price points:

- The budget option: NVIDIA RTX 3080 (NVIDIA Founders Edition, ASUS Strix, EVGA FTW3 etc.)

- A solid mid-range: NVIDIA RTX 3090, or RTX 6000>

- Top-tier: NVIDIA RTX 8000 >

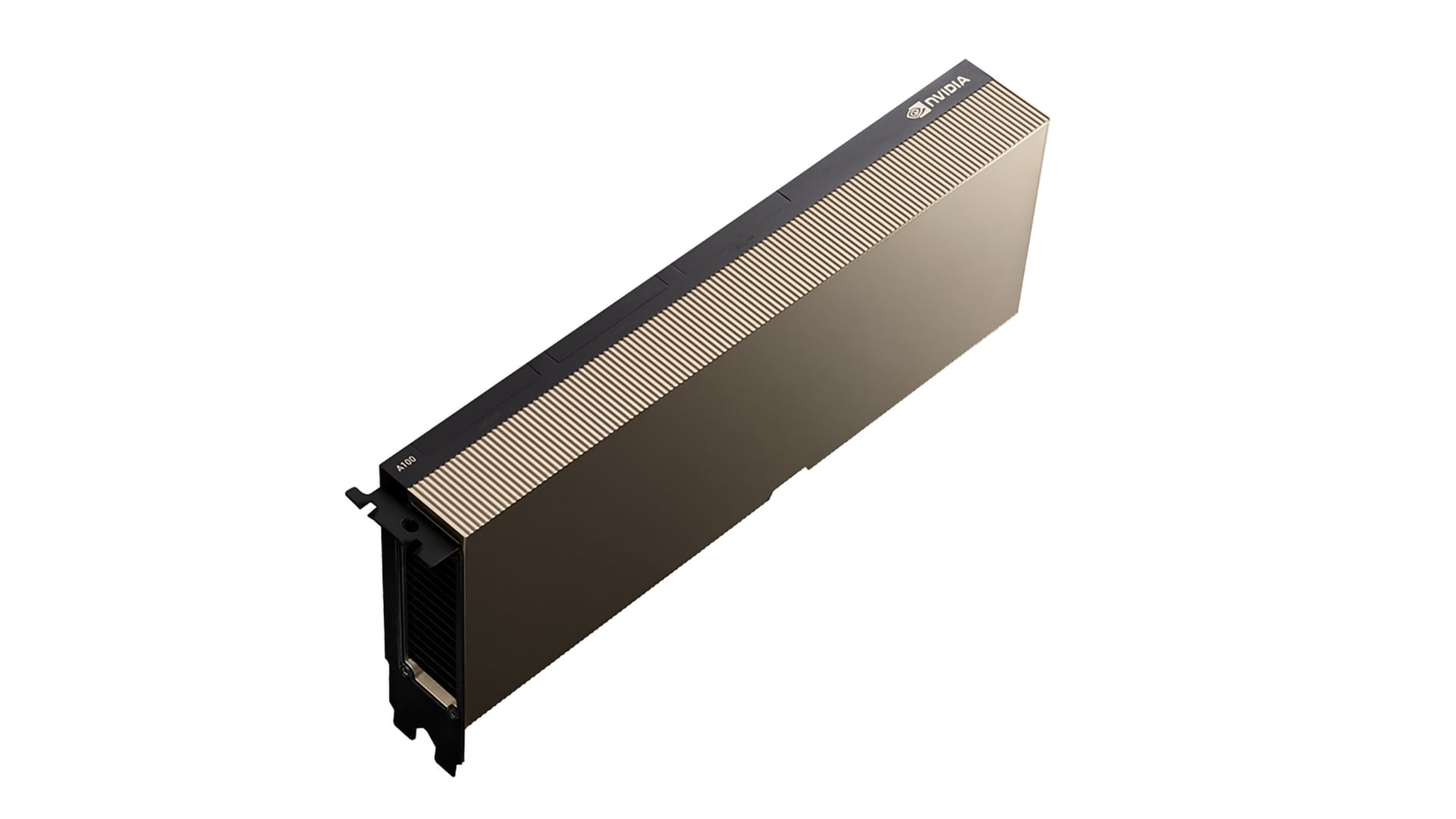

- Enterprise grade: NVIDIA V100 or A100 >

Source: NVIDIA
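Once you have a rough memory estimate, you can shortlist cards by VRAM. The Python sketch below does this. The table lists one common memory configuration per card: some cards also ship in other sizes (for example RTX 3080 10/12GB, V100 16/32GB, A100 40/80GB), so check the exact model you are quoted. The 90% usable-memory headroom is an assumption, and the function name is hypothetical.

```python
# One common VRAM configuration (GB) per card in the tiers above.
# Several cards come in more than one size; verify the exact SKU.
GPU_VRAM_GB = [
    ("budget", "RTX 3080", 10),
    ("mid-range", "RTX 3090", 24),
    ("mid-range", "RTX 6000", 24),
    ("top-tier", "RTX 8000", 48),
    ("enterprise", "V100", 32),
    ("enterprise", "A100", 80),
]


def cards_that_fit(required_gb, headroom=0.9):
    """Return (tier, card) pairs whose VRAM covers required_gb.

    Results keep the order of the tier list. headroom is an assumed
    safety factor: only 90% of each card's VRAM is treated as usable.
    """
    return [
        (tier, name)
        for tier, name, vram in GPU_VRAM_GB
        if required_gb <= vram * headroom
    ]


print(cards_that_fit(8))   # every card in the list
print(cards_that_fit(30))  # only the RTX 8000 and the A100 (80GB)
```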

You can go lower than the RTX 3080, for example with older RTX cards like the RTX 2080 or 2080 Ti. However, you will get lower performance and less VRAM (8GB on the RTX 2080, 11GB on the 2080 Ti), which will limit the algorithms you can run on your system.

Starting with the mid-range cards, you get 24GB of VRAM, which is enough for most algorithms and data sets. These are quite capable GPUs, and if you want more performance, you might be better off getting two or more of them instead of one from the higher price bracket.
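One caveat when buying several mid-range cards instead of one larger card: whether their memory adds up depends on how the work is split. The Python sketch below shows the difference under idealized assumptions. With data parallelism, each GPU holds a full copy of the model. With model parallelism, the model is assumed to split perfectly across GPUs with no extra buffers. The function and strategy names are hypothetical.

```python
def fits_on_gpus(model_gb, vram_per_gpu_gb, num_gpus, strategy):
    """Idealized check of whether a model's memory footprint fits.

    strategy:
      "data_parallel"  - every GPU holds a full copy, so extra GPUs add
                         throughput but do not raise the per-model limit.
      "model_parallel" - the model is split across GPUs; assumes a perfect
                         split with no duplication or communication buffers.
    """
    if strategy == "data_parallel":
        return model_gb <= vram_per_gpu_gb
    if strategy == "model_parallel":
        return model_gb <= vram_per_gpu_gb * num_gpus
    raise ValueError(f"unknown strategy: {strategy!r}")


# Two hypothetical 24GB cards versus a 40GB workload:
print(fits_on_gpus(40, 24, 2, "data_parallel"))   # False
print(fits_on_gpus(40, 24, 2, "model_parallel"))  # True
```

In other words, two 24GB cards speed up workloads that already fit on one card. They only stand in for a single larger card if your framework splits the model itself.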

Source: NVIDIA

The top-tier and enterprise options are mostly reserved for system integrators and server manufacturers, as vendors generally won’t sell them to the general public. That’s not even taking into account the eye-watering price tag of tens of thousands of dollars. But if you need this heavy-hitting hardware, we advise you to take a look at our range of compute-oriented workstations.

Compute density problems

Cramming enough GPUs and other supporting hardware into a relatively portable chassis poses its own set of problems. Air-cooled solutions limit a standard system to 4 GPUs or fewer, because each card carries its own bulky heatsink and fans, which take up expansion slots and need room to move air.
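The slot arithmetic behind that limit is simple. The Python sketch below assumes a hypothetical chassis with 8 usable slot positions and typical card widths: air-cooled cards often occupy two or three slots, while a card fitted with a water block can often be made single-slot. It ignores PCIe lanes, power, and airflow gaps, all of which usually lower the real number further.

```python
def max_gpus(available_slots, slots_per_card):
    """How many cards physically fit, given expansion-slot spacing.

    Ignores PCIe lane counts, power supply limits and airflow gaps,
    which usually lower the real number further.
    """
    return available_slots // slots_per_card


# Hypothetical chassis with 8 usable slot positions:
print(max_gpus(8, 2))  # 4 dual-slot air-cooled cards
print(max_gpus(8, 3))  # 2 triple-slot air-cooled cards
print(max_gpus(8, 1))  # 8 single-slot cards, e.g. with water blocks
```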

No matter how efficiently the fans move air through a heatsink, it is simply not enough, not to mention the noise. But physics and engineering be praised! Liquid cooling is ready to take center stage and provide the perfect solution.

Liquid cooling technology

At its core, liquid cooling is just coolant pumped past a heat source. GPUs and CPUs can be fitted with water blocks that carry the heat to a radiator, which then dissipates it. Water and glycol-based coolants have excellent heat transfer and heat capacity properties, which allow liquid cooling to be up to 10x more efficient than air cooling.
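The heat capacity advantage can be made concrete with the standard relation Q = ṁ · c_p · ΔT. The Python sketch below compares how much water and how much air must flow to carry away the heat of a hypothetical 350 W GPU, assuming the fluid warms by 10 K. It uses rounded textbook property values. This only illustrates the heat capacity gap between the two fluids. It is not a derivation of the "up to 10x" efficiency figure, which also depends on water blocks, radiators, and fans.

```python
# Specific heat capacity c_p (J per kg per K) and density (kg per m^3)
# near room temperature; rounded textbook values.
WATER = {"cp": 4186.0, "density": 998.0}
AIR = {"cp": 1005.0, "density": 1.2}


def mass_flow_kg_s(heat_w, fluid, delta_t_k):
    """Mass flow needed to remove heat_w with a temperature rise of delta_t_k.

    Rearranged from Q = m_dot * c_p * delta_T.
    """
    return heat_w / (fluid["cp"] * delta_t_k)


def volume_flow_l_min(heat_w, fluid, delta_t_k):
    """The same requirement expressed as litres per minute."""
    m3_per_s = mass_flow_kg_s(heat_w, fluid, delta_t_k) / fluid["density"]
    return m3_per_s * 1000 * 60


# Hypothetical 350 W GPU, fluid allowed to warm by 10 K:
water = volume_flow_l_min(350, WATER, 10)
air = volume_flow_l_min(350, AIR, 10)
print(f"water: {water:.2f} L/min, air: {air:.0f} L/min, ratio ~{air / water:.0f}x")
```

Moving roughly half a litre of water per minute does the same job as moving well over a thousand litres of air, which is why a liquid loop can serve densely packed GPUs.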

Effective use of liquid cooling requires some extra engineering and components, but it is worth it in the end. Liquid cooling offers many benefits that anyone who works with machine learning workstations should know about.

The right GPU can have a huge impact on your work and productivity. Hopefully, you will now be able to choose the best GPU for your workstation and machine learning needs. If you want to learn more about AI workstations, be sure to check out the next article in our series.